Lei Feng Network editor's note: The author of this article, Zhang Junlin, introduces three kinds of semantic search models based on deep RNNs.

This article discusses how to use a deep learning system to build a semantic search engine. Semantic search here means matching user queries against web pages at the semantic level. For example, suppose the user enters "iPhone" and an article says "Apple is trying to make a new type of mobile phone" without ever mentioning the iPhone explicitly; a semantic search engine should still be able to retrieve that article. Traditional search engines are powerless in this situation because their ranking is essentially based on literal matching: a document with no literal match will not be returned, even if it is semantically related to the query.

Everyone uses search engines, so they need no introduction. But from a technical point of view, what is search? That needs a brief explanation. Suppose we have 1 billion web pages forming a document collection D, and a user who wants to find some information sends a query Query to the search engine. The search engine essentially computes the degree of relevance between each page in D and the user query Query, and ranks the more relevant pages higher; this ranking forms the search results.

So in essence, search takes two pieces of information, Query and a document Di, and computes the degree of relevance between the two. The traditional method measures how much the two overlap, using features such as TF-IDF or whether the query terms appear in the title. (In this article we ignore link relationships and other factors and consider only text matching.)

In other words, search can be recast as a sentence matching problem.

Given a user query and an article, a mapping function either judges whether the two are related or not, or assigns one of several relevance grades from 1 to 5, where 1 means irrelevant and 5 means highly relevant.

That is, given Doc and Query, a neural network is used to construct the mapping function, which maps the pair into the space of scores 1 to 5.
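In symbols, and assuming a mapping function named $F$ (the name and the set notation are illustrative, not taken from the original figures), the two formulations can be sketched as:

$$
F(\text{Query}, \text{Doc}) \in \{0, 1\} \qquad \text{(related or not related)}
$$

$$
F(\text{Query}, \text{Doc}) \in \{1, 2, 3, 4, 5\} \qquad \text{(graded relevance, 1 = irrelevant, 5 = highly relevant)}
$$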

We have previously summarized several common RNN-based network structures for sentence matching. This article presents neural network structures that treat search first as a typical sentence matching problem and then as a special one. We can call these RNN-based semantic search structures NeuralSearch structures. Within my own reading, I have seen CNNs applied to search but have not yet seen RNN structures. In fact, neural network approaches to search are relatively rare, which may be related to the query being very short and the document very long. I do not know whether the methods described here are effective; this article simply shares the idea.

Before presenting the RNN structures, we give a very simple unsupervised semantic search model.

| A Simple Unsupervised Semantic Search Model

In fact, if you want to do semantic search with neural networks, there is a very simple way. Its working mechanism is described here.

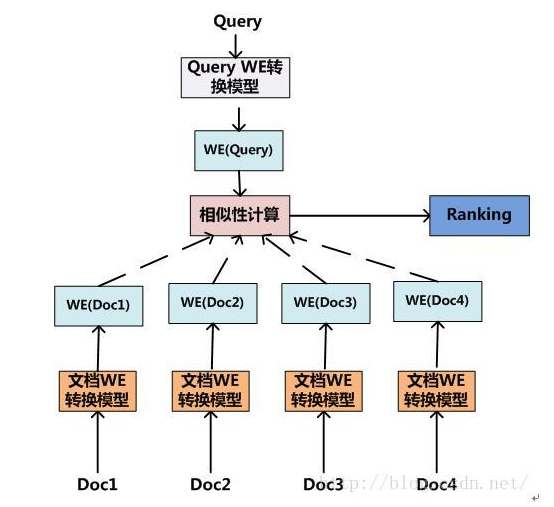

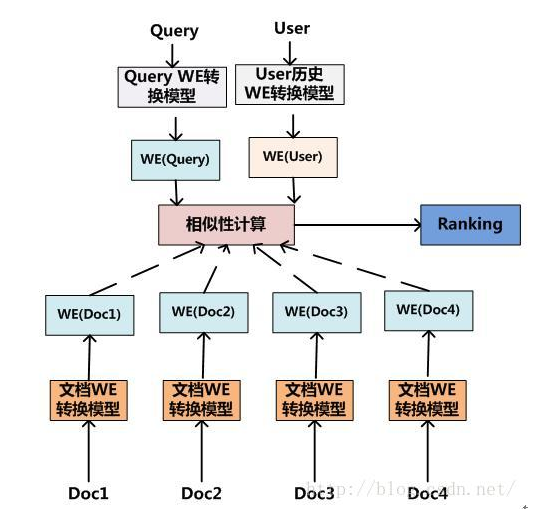

Figure 1 Abstract semantic search structure

Figure 1 shows the most abstract neural network model structure for semantic search. Its core idea is to convert each page in document set D into a Word Embedding representation through some mapping method, and to map the user query Q to a Word Embedding representation as well. In effect, the query and the document are each mapped into the semantic space. The two are then matched using a similarity function.

The documents are then output in descending order of similarity score, which completes the ranking and yields the search results.

Some earlier work combining Word Embedding with IR is in fact covered by this abstract framework. The differences lie only in how the document's Word Embedding representation is derived from its word list (the document WE conversion module in Figure 1), how the user query is mapped to its Word Embedding representation (the query WE conversion module in Figure 1), and which similarity function F is used.

Within this framework, we give a simple and easy way to do semantic search. We only need to settle two things:

1. How do we obtain the Word Embedding representation of a document and of the query? Simply sum or average the Word Embeddings of their words. The results of this method are not necessarily bad; in some cases direct summation works quite well, and it is very simple to implement.

2. Which similarity function should be used between the document and the query? Compute the cosine similarity directly.

In this way, a very simple and workable semantic search system can be built. Because it uses Word Embedding representations, it supports semantic search: even if the document contains none of the query words, as long as it contains words close in meaning to them, their Word Embeddings are similar and the semantic similarity can be computed.
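The two steps above (average the word vectors, then rank by cosine similarity) can be sketched in a few lines. This is a minimal illustration, not a production system: the three-dimensional vectors in `EMBEDDINGS` are invented toy values, and the names `embed`, `cosine`, and `search` are assumptions made for this example. In practice the vectors would come from a trained Word Embedding model.

```python
import math

# Toy 3-dimensional embeddings, invented for illustration only.
EMBEDDINGS = {
    "iphone": [0.90, 0.10, 0.00],
    "apple":  [0.80, 0.20, 0.10],
    "mobile": [0.70, 0.30, 0.00],
    "phone":  [0.85, 0.15, 0.05],
    "banana": [0.00, 0.10, 0.90],
    "fruit":  [0.10, 0.00, 0.80],
}
DIM = 3

def embed(words):
    """Average the embeddings of the known words (zero vector if none are known)."""
    vectors = [EMBEDDINGS[w] for w in words if w in EMBEDDINGS]
    if not vectors:
        return [0.0] * DIM
    return [sum(component) / len(vectors) for component in zip(*vectors)]

def cosine(u, v):
    """Cosine similarity; defined as 0.0 when either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def search(query, documents):
    """Return (score, document) pairs sorted from most to least similar."""
    q = embed(query.lower().split())
    scored = [(cosine(q, embed(doc.lower().split())), doc) for doc in documents]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)

docs = ["apple mobile phone", "banana fruit"]
results = search("iphone", docs)
for score, doc in results:
    print(f"{score:.3f}  {doc}")
```

Note that the first document ranks highest even though it never contains the word "iphone", which is exactly the behavior that literal matching cannot provide.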

Intuitively, this unsupervised method should increase the recall of the search system, because documents that previously could not be matched can now be matched. In theory, however, it is likely to lower precision, so it may need to be used together with traditional methods.

The abstract structure of Figure 1 readily suggests a new idea: integrating personalized search to form a "personalized semantic search engine". The change is simple: just add a user personalization modeling module, as shown in Figure 2. The user's personal history passes through the "user history WE conversion module", which expresses the user's personalization model in Word Embedding form, and personalization factors are thereby integrated into the semantic search system.

Figure 2 Personalized semantic search abstract framework

I do not seem to have seen this structure mentioned before, so it should be a new idea, albeit a simple one. There may be many different ways to build the personalization module, so this structure also leaves plenty of room for further work.
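The article does not say how the user vector should be combined with the query vector, so the following is only one hypothetical way to realize Figure 2: the user's history is averaged into a vector and blended with the query vector by a weight `alpha` before the cosine comparison. The toy two-dimensional embeddings (roughly "technology" and "food" axes), the blending rule, and all function names are assumptions for illustration.

```python
import math

# Toy 2-dimensional embeddings, invented for illustration only.
EMB = {
    "apple":  [0.6, 0.6],   # deliberately ambiguous between the two senses
    "iphone": [0.9, 0.1],
    "phone":  [1.0, 0.0],
    "fruit":  [0.0, 1.0],
    "pie":    [0.1, 0.9],
}

def mean_vector(words):
    """Average the embeddings of the known words (zero vector if none are known)."""
    vectors = [EMB[w] for w in words if w in EMB]
    if not vectors:
        return [0.0, 0.0]
    return [sum(c) / len(vectors) for c in zip(*vectors)]

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def personalized_search(query, history, documents, alpha=0.5):
    """Rank documents against a blend of the query and the user-history vector.

    The linear blend (weight `alpha`) is an assumption, not the article's method.
    """
    q = mean_vector(query.split())
    u = mean_vector(history)
    blended = [(1 - alpha) * qi + alpha * ui for qi, ui in zip(q, u)]
    return sorted(documents,
                  key=lambda d: cosine(blended, mean_vector(d.split())),
                  reverse=True)

DOCS = ["iphone phone", "fruit pie"]
print(personalized_search("apple", ["phone", "iphone"], DOCS))
print(personalized_search("apple", ["fruit", "pie"], DOCS))
```

With the same ambiguous query "apple", a user whose history is about phones sees the phone document first, while a user whose history is about food sees the fruit document first.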

| Treating Search Problems as Typical RNN Sentence Matching Problems

In a previous article, we summarized from the existing literature the common neural network structures that use RNNs to solve the sentence matching problem. Since we regard search as a sentence matching problem, these models can be applied to it directly. Of course, feeding the Word Embeddings of an entire document word by word into an RNN is not necessarily a good idea; the RNN could instead work on sentence-level Word Embeddings, with other variations added on top. To keep the description simple, the simple approach is used here.

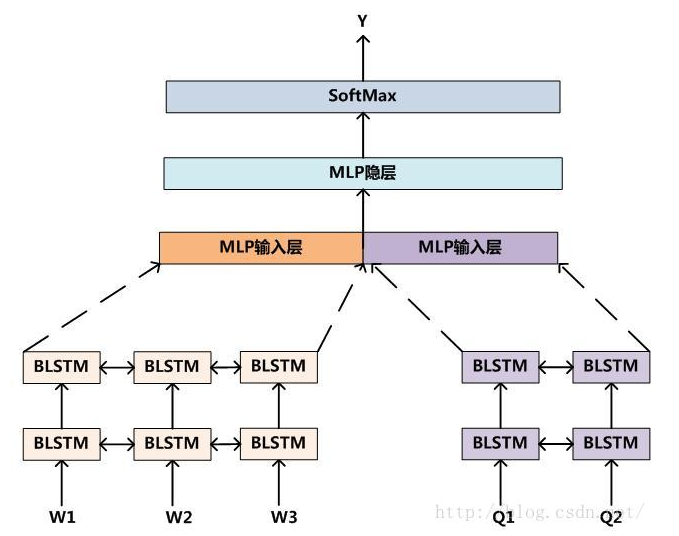

Figure 3 NeuralSearch Structure I

Figure 3 shows the first possible RNN-based neural network structure for semantic search, which we call NeuralSearch Model1. The core idea is to build a separate deep RNN for the document D and for the user query Q to extract features from each, then concatenate the two feature vectors as the input layer of a three-layer MLP. The MLP applies nonlinear transformations to explore the relationship between the two and then outputs the classification result. In the figure, W denotes a word that appears in the document and Q a word that appears in the query. Compared with a CNN model, the RNN model can explicitly take word order into account.
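The data flow of NeuralSearch Model1 can be sketched without any deep learning library. The sketch below makes several simplifying assumptions that are not in the article: a single-layer, one-directional tanh RNN stands in for each deep RNN, the final hidden state is used as the extracted feature, biases are omitted, and all weights and embeddings are random and untrained. It shows only the forward pass, so the output probabilities are meaningless until the network is trained.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the untrained toy weights are reproducible
EMB_DIM, HID_DIM, NUM_CLASSES = 4, 6, 5

def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def softmax(logits):
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

_embeddings = {}
def embed(word):
    """Look up (or lazily create) a random toy embedding for a word."""
    if word not in _embeddings:
        _embeddings[word] = [rng.uniform(-1, 1) for _ in range(EMB_DIM)]
    return _embeddings[word]

def rnn_states(w_in, w_rec, inputs):
    """Plain tanh RNN (a stand-in for the deep RNN); returns all hidden states."""
    h = [0.0] * len(w_rec)
    states = []
    for x in inputs:
        h = [math.tanh(a + b) for a, b in zip(matvec(w_in, x), matvec(w_rec, h))]
        states.append(h)
    return states

# Separate encoders for the document and the query, as in Figure 3.
DOC_RNN = (rand_matrix(HID_DIM, EMB_DIM), rand_matrix(HID_DIM, HID_DIM))
QRY_RNN = (rand_matrix(HID_DIM, EMB_DIM), rand_matrix(HID_DIM, HID_DIM))
MLP_HIDDEN = rand_matrix(HID_DIM, 2 * HID_DIM)   # input layer -> hidden layer
MLP_OUT = rand_matrix(NUM_CLASSES, HID_DIM)      # hidden layer -> 5 relevance grades

def model1(query_words, doc_words):
    d = rnn_states(*DOC_RNN, [embed(w) for w in doc_words])[-1]
    q = rnn_states(*QRY_RNN, [embed(w) for w in query_words])[-1]
    hidden = [math.tanh(v) for v in matvec(MLP_HIDDEN, d + q)]  # d + q concatenates
    return softmax(matvec(MLP_OUT, hidden))

probs = model1(["iphone"], "apple is making a new mobile phone".split())
print([round(p, 3) for p in probs])  # probabilities for relevance grades 1..5
```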

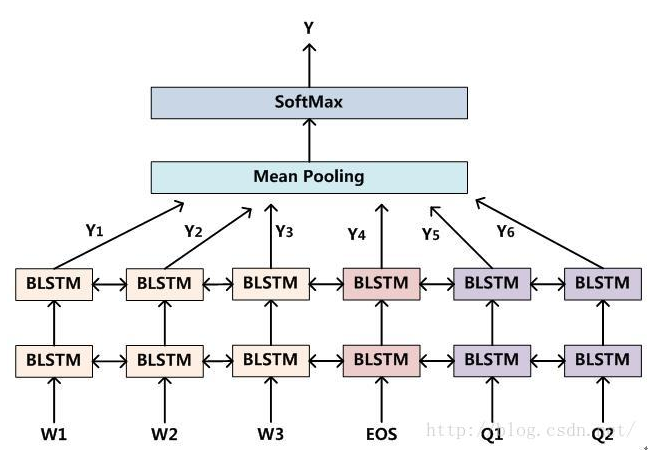

Figure 4 NeuralSearch Structure II

Figure 4 shows the second possible RNN-based neural network structure for semantic search, which we call NeuralSearch Model2. The core idea is to concatenate the words of the document D and the user query Q in sequence, separated by a special separator EOS, as the input of a deep RNN structure. On top of the deep RNN, Mean Pooling collects the vote of each BLSTM unit, and SoftMax gives the final classification result. As before, W in the figure denotes a word that appears in the document and Q a word that appears in the query. Unlike NeuralSearch Model1, this model starts relating the words in D and Q at the input layer of the network.
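A forward pass of NeuralSearch Model2 can be sketched as follows. As a simplification that is not in the article, a single bidirectional tanh RNN stands in for the deep BLSTM stack, biases are omitted, and weights and embeddings are random and untrained. The helper names and the `<EOS>` token spelling are assumptions for this example.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the untrained toy weights are reproducible
EMB_DIM, HID_DIM, NUM_CLASSES = 4, 6, 5

def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def softmax(logits):
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

_embeddings = {}
def embed(word):
    """Look up (or lazily create) a random toy embedding for a word."""
    if word not in _embeddings:
        _embeddings[word] = [rng.uniform(-1, 1) for _ in range(EMB_DIM)]
    return _embeddings[word]

def rnn_states(w_in, w_rec, inputs):
    """Plain tanh RNN (a stand-in for an LSTM); returns all hidden states."""
    h = [0.0] * len(w_rec)
    states = []
    for x in inputs:
        h = [math.tanh(a + b) for a, b in zip(matvec(w_in, x), matvec(w_rec, h))]
        states.append(h)
    return states

def birnn(forward, backward, inputs):
    """Concatenate forward and backward hidden states at every position."""
    fwd = rnn_states(*forward, inputs)
    bwd = rnn_states(*backward, inputs[::-1])[::-1]
    return [f + b for f, b in zip(fwd, bwd)]

FORWARD = (rand_matrix(HID_DIM, EMB_DIM), rand_matrix(HID_DIM, HID_DIM))
BACKWARD = (rand_matrix(HID_DIM, EMB_DIM), rand_matrix(HID_DIM, HID_DIM))
W_OUT = rand_matrix(NUM_CLASSES, 2 * HID_DIM)

def model2(query_words, doc_words):
    tokens = doc_words + ["<EOS>"] + query_words        # D, separator, then Q
    states = birnn(FORWARD, BACKWARD, [embed(t) for t in tokens])
    pooled = [sum(col) / len(states) for col in zip(*states)]  # mean pooling
    return softmax(matvec(W_OUT, pooled))

probs = model2(["iphone"], "apple is making a new mobile phone".split())
print([round(p, 3) for p in probs])  # probabilities for relevance grades 1..5
```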

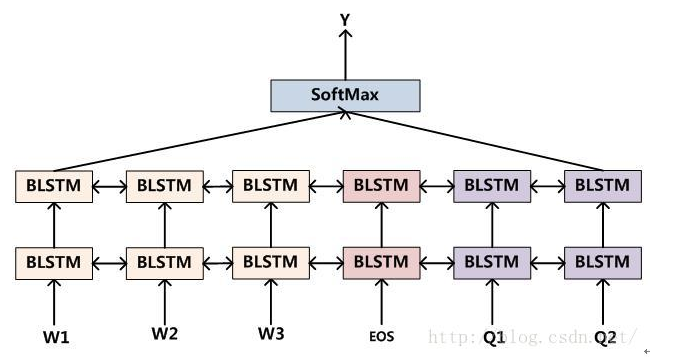

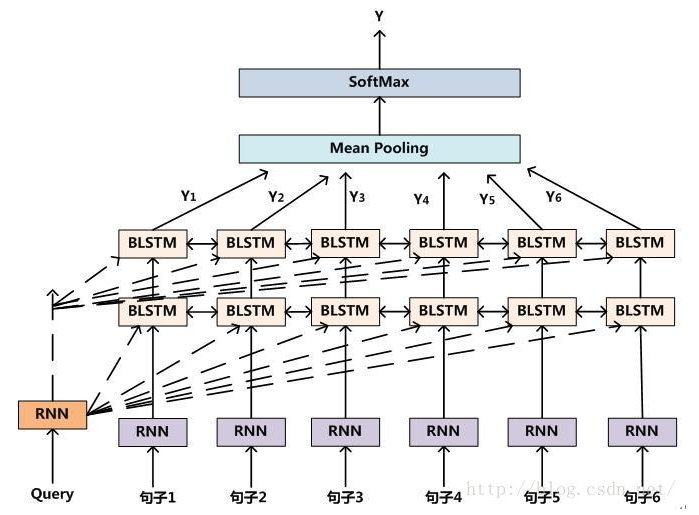

Figure 5 NeuralSearch Structure III

Figure 5 shows the third possible RNN-based neural network structure for semantic search, which we call NeuralSearch Model3. It is identical to NeuralSearch Model2 at the input layer and in the deep network above it. The difference is that, before the final classification, the BLSTM units do not each cast a separate vote. Instead, the hidden states of the first node and the last node of the bidirectional RNN are concatenated as the basis for the final classification, because these two states store the semantic relationship information between the document D and the user query Q.
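The only change from Model2 to Model3 is the step before SoftMax, which the sketch below makes explicit. The same simplifications apply and are assumptions rather than the article's specification: a single bidirectional tanh RNN replaces the deep BLSTM stack, biases are omitted, and all parameters are random and untrained.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the untrained toy weights are reproducible
EMB_DIM, HID_DIM, NUM_CLASSES = 4, 6, 5

def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def softmax(logits):
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

_embeddings = {}
def embed(word):
    """Look up (or lazily create) a random toy embedding for a word."""
    if word not in _embeddings:
        _embeddings[word] = [rng.uniform(-1, 1) for _ in range(EMB_DIM)]
    return _embeddings[word]

def rnn_states(w_in, w_rec, inputs):
    """Plain tanh RNN (a stand-in for an LSTM); returns all hidden states."""
    h = [0.0] * len(w_rec)
    states = []
    for x in inputs:
        h = [math.tanh(a + b) for a, b in zip(matvec(w_in, x), matvec(w_rec, h))]
        states.append(h)
    return states

def birnn(forward, backward, inputs):
    """Concatenate forward and backward hidden states at every position."""
    fwd = rnn_states(*forward, inputs)
    bwd = rnn_states(*backward, inputs[::-1])[::-1]
    return [f + b for f, b in zip(fwd, bwd)]

FORWARD = (rand_matrix(HID_DIM, EMB_DIM), rand_matrix(HID_DIM, HID_DIM))
BACKWARD = (rand_matrix(HID_DIM, EMB_DIM), rand_matrix(HID_DIM, HID_DIM))
W_OUT = rand_matrix(NUM_CLASSES, 4 * HID_DIM)  # first + last state, each 2 * HID_DIM

def model3(query_words, doc_words):
    tokens = doc_words + ["<EOS>"] + query_words
    states = birnn(FORWARD, BACKWARD, [embed(t) for t in tokens])
    features = states[0] + states[-1]  # concatenate first and last node states
    return softmax(matvec(W_OUT, features))

probs = model3(["iphone"], "apple is making a new mobile phone".split())
print([round(p, 3) for p in probs])  # probabilities for relevance grades 1..5
```

The first node carries the backward pass's summary of the whole sequence and the last node carries the forward pass's summary, which is why these two positions are enough to cover both D and Q.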

Our title mentions semantic search, yet we seem not to have discussed semantics for a while. In fact, the first simple semantic search model already explains the point: as long as words are represented as Word Embeddings, the model can handle matches that are literally different but semantically the same, so the matching necessarily happens at the semantic level.

The three structures above treat search entirely as a sentence matching problem. Let us see whether we can construct a network structure tailored to the characteristics of search.

| Treating Search Problems as Atypical RNN Sentence Matching Problems

Compared with the ordinary sentence matching problem, search has one obvious characteristic: the document is long and the user's query is short, so the amount of information in the two differs greatly. It is therefore uncertain whether the typical RNN sentence matching approach can achieve good results: with a short query and a very long document, it is unknown whether the query information will be weakened and whether the LSTM can capture such long-distance relationships.

We can therefore further adapt the RNN model structure so that the neural network better fits the characteristics of the search task.

Figure 6 NeuralSearch Structure IV

Figure 6 shows a NeuralSearch structure specially adapted to the search scenario, which we call NeuralSearch Model4. First, an RNN semantically encodes the user query and each sentence of the document. The layers above still use the classical deep bidirectional RNN structure. The difference from the previous structures is that the input to each BLSTM unit consists of two parts: the semantic encoding of the query and the semantic encoding of one sentence. The two are concatenated as the new input, so each BLSTM node in effect judges the relevance between one sentence and the user query, and the bidirectional RNN strings together all the sentences of the document in order, so the RNN structure reflects the order of the sentences. In a deeper network this process is repeated until the top BLSTM layer produces its output. The outputs for the sentences are merged by Mean Pooling, and SoftMax then produces the final classification result, one of the relevance classes {1, 2, 3, 4, 5}. The process can be understood as determining the semantic relevance between the query and each sentence, letting each sentence cast a vote, and building the semantic relevance between the whole document and the query from the sentences' votes.

As can be seen, this structure converts the document into a sequence of sentences and reuses the user query at every layer, attaching it to every sentence. On the one hand, this reinforces the user's query in an attempt to handle the extreme difference in length between the document and the query. On the other hand, repeatedly injecting the query's semantic representation at different levels is similar to the idea in Residual Networks of establishing direct ShortCut connections between the input and the intermediate structures of a deep network.
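The sentence-level design of NeuralSearch Model4, including re-attaching the query at every layer, can be sketched as a forward pass. Several details below are assumptions that the article leaves open: one shared tanh RNN encodes both the query and the sentences, its final hidden state is used as the semantic encoding, two bidirectional tanh RNN layers stand in for the deep BLSTM stack, biases are omitted, and all parameters are random and untrained.

```python
import math
import random

rng = random.Random(0)  # fixed seed so the untrained toy weights are reproducible
EMB_DIM, HID_DIM, NUM_CLASSES = 4, 6, 5

def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(matrix, vector):
    return [sum(m * x for m, x in zip(row, vector)) for row in matrix]

def softmax(logits):
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

_embeddings = {}
def embed(word):
    """Look up (or lazily create) a random toy embedding for a word."""
    if word not in _embeddings:
        _embeddings[word] = [rng.uniform(-1, 1) for _ in range(EMB_DIM)]
    return _embeddings[word]

def rnn_states(w_in, w_rec, inputs):
    """Plain tanh RNN (a stand-in for an LSTM); returns all hidden states."""
    h = [0.0] * len(w_rec)
    states = []
    for x in inputs:
        h = [math.tanh(a + b) for a, b in zip(matvec(w_in, x), matvec(w_rec, h))]
        states.append(h)
    return states

def birnn(forward, backward, inputs):
    """Concatenate forward and backward hidden states at every position."""
    fwd = rnn_states(*forward, inputs)
    bwd = rnn_states(*backward, inputs[::-1])[::-1]
    return [f + b for f, b in zip(fwd, bwd)]

def make_bilayer(in_dim):
    """Parameters (forward, backward) for one bidirectional layer."""
    return [(rand_matrix(HID_DIM, in_dim), rand_matrix(HID_DIM, HID_DIM))
            for _ in range(2)]

ENCODER = (rand_matrix(HID_DIM, EMB_DIM), rand_matrix(HID_DIM, HID_DIM))
# Layer 1 sees query + sentence encodings; layer 2 sees query + layer-1 states.
LAYERS = [make_bilayer(2 * HID_DIM), make_bilayer(3 * HID_DIM)]
W_OUT = rand_matrix(NUM_CLASSES, 2 * HID_DIM)

def encode(words):
    """Semantic encoding of a word sequence: final state of the shared RNN."""
    return rnn_states(*ENCODER, [embed(w) for w in words])[-1]

def model4(query_words, doc_sentences):
    q = encode(query_words)
    states = [encode(sentence) for sentence in doc_sentences]
    for forward, backward in LAYERS:
        inputs = [q + s for s in states]        # re-attach the query at every layer
        states = birnn(forward, backward, inputs)
    pooled = [sum(col) / len(states) for col in zip(*states)]  # each sentence votes
    return softmax(matvec(W_OUT, pooled))

document = [s.split() for s in ["apple announced a new product",
                                "it is a new type of mobile phone"]]
probs = model4(["iphone"], document)
print([round(p, 3) for p in probs])  # probabilities for relevance grades 1..5
```

Because the upper layers run over sentences rather than words, the sequence they must model is much shorter than the document's word sequence, and the query vector is present in every input rather than only at one end.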

With the NeuralSearch structures above, we can adopt the traditional Learning to Rank approach and train the neural network on manually labeled training data. Once trained, the network can be integrated into the search system to provide semantic search.

Of course, I came up with the RNN structures above myself. I have not yet seen literature on similar ideas, and I have neither the time nor the training data to verify their effectiveness, so there is no conclusion on whether any of these structures actually works for search. Quite possibly some of them are effective and others have no effect at all. We therefore cannot make any conclusive claims or explanations here; we are just sharing ideas that we think might be feasible. Interested readers who have data can try them.

Finally, two more remarks. Readers familiar with Learning to Rank will know that it includes three kinds of models: the pointwise (single-document) approach, the pairwise (document-pair) approach, and the listwise (document-list) approach. The models above are basically pointwise. In fact, we could combine RNN neural networks with the pairwise and listwise approaches to build new Learning to Rank models. I am not sure whether this has already been done; I do not seem to have seen it yet, but such a combination is clearly only a matter of time, and if it proves effective it would be a relatively large innovation. There is therefore a lot of work that could be done in this area. I have neither the data nor the energy to pursue it, but if conditions allow, this direction is worth more effort.
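To make the pairwise idea concrete, here is a minimal sketch of a pairwise hinge (margin) loss, one common choice in pairwise Learning to Rank. It assumes, hypothetically, that a NeuralSearch network has been modified to output a single relevance score per (query, document) pair instead of a five-way classification; the scores below are made-up numbers, and the function names are illustrative.

```python
from itertools import combinations

def pairwise_hinge_loss(score_relevant, score_irrelevant, margin=1.0):
    """Zero when the more relevant document outscores the other by at least `margin`."""
    return max(0.0, margin - (score_relevant - score_irrelevant))

def query_pairwise_loss(scores, labels, margin=1.0):
    """Average hinge loss over all document pairs of one query with different labels."""
    losses = []
    for (s_i, y_i), (s_j, y_j) in combinations(zip(scores, labels), 2):
        if y_i == y_j:
            continue  # equally relevant documents impose no ordering constraint
        better, worse = (s_i, s_j) if y_i > y_j else (s_j, s_i)
        losses.append(pairwise_hinge_loss(better, worse, margin))
    return sum(losses) / len(losses) if losses else 0.0

# Hypothetical model scores for three documents labeled 5, 3, and 1.
well_ordered = query_pairwise_loss([3.0, 1.0, 0.0], [5, 3, 1])
mis_ordered = query_pairwise_loss([0.0, 1.0], [5, 1])
print(well_ordered, mis_ordered)
```

The loss depends only on the relative order of documents for the same query, which is the key difference from the pointwise models described above.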

Lei Feng Network note: This article is reposted from CSDN. To republish it, please obtain authorization, credit the author and the source, and do not abridge the content.
