We can optimize the neural network architecture to fit a microcontroller's memory and compute limits without compromising accuracy. In this paper, we explain and explore the potential of depthwise separable convolutional neural networks for keyword spotting on Cortex-M processors.

Keyword spotting (KWS) is critical for voice-based user interaction on smart devices and requires real-time response and high accuracy to ensure a good user experience. Recently, neural networks have become a popular choice for KWS architectures because their accuracy is superior to that of traditional speech processing algorithms.

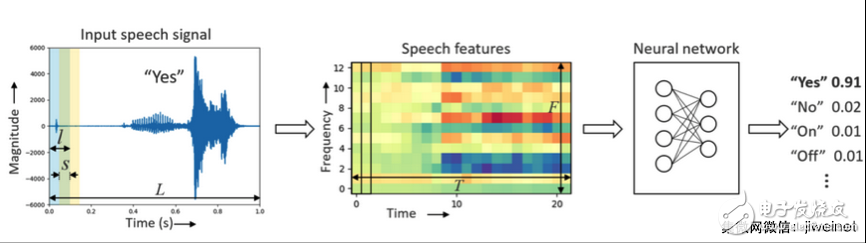

Keyword spotting neural network pipeline

The power budget of KWS applications is tightly constrained because they must remain "always on". While KWS applications can also run on dedicated DSPs or high-performance CPUs, they are better suited to Arm Cortex-M microcontrollers, which help minimize cost and are often already used at the edge of the Internet of Things to handle other tasks.

However, to deploy a neural network-based KWS on a Cortex-M-based microcontroller, we face the following challenges:

1. Limited memory space

A typical Cortex-M system provides at most a few hundred kilobytes of available memory. This means that the entire neural network model, including inputs/outputs, weights, and activations, must fit within this small memory budget.

2. Limited computing resources

Since KWS is always on, its real-time requirement limits the total number of operations per neural network inference.
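The two constraints above can be checked together for any candidate model. The following is a minimal sketch, not a method taken from the source: the `Budget` class, the `fits_budget` helper, and every number in the example are hypothetical and chosen only for illustration. It assumes the memory footprint is the sum of weights and peak activations, and that the compute load is operations per inference multiplied by the inference rate.

```python
from dataclasses import dataclass


@dataclass
class Budget:
    memory_bytes: int    # total memory available to the model (hypothetical)
    ops_per_second: int  # operations per second the core can spare for KWS (hypothetical)


def fits_budget(num_weights, peak_activations, ops_per_inference,
                budget, inferences_per_second=10, bytes_per_value=1):
    """Return (fits, memory_bytes, ops_per_second) for a candidate model."""
    # Weights and activations share the same memory, so they are summed.
    memory = (num_weights + peak_activations) * bytes_per_value
    # An always-on model runs continuously, so the load scales with the rate.
    ops_rate = ops_per_inference * inferences_per_second
    fits = memory <= budget.memory_bytes and ops_rate <= budget.ops_per_second
    return fits, memory, ops_rate


# Hypothetical budget and model size, for illustration only.
budget = Budget(memory_bytes=200 * 1024, ops_per_second=20_000_000)
print(fits_budget(150_000, 4_000, 1_500_000, budget))                     # 8-bit values
print(fits_budget(150_000, 4_000, 1_500_000, budget, bytes_per_value=4))  # 32-bit values
```

With these made-up numbers, the same model fits when each value takes one byte and fails the memory check when each value takes four bytes, which is why quantization matters on this class of device.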

The following are typical neural network architectures for KWS inference:

• Deep Neural Network (DNN)

A DNN is a standard feedforward neural network made of a stack of fully connected layers and nonlinear activation layers.

• Convolutional Neural Network (CNN)

A major drawback of DNN-based KWS is its inability to model the local, temporal, and spectral correlations in speech features. A CNN exploits these correlations by treating the input time-domain and frequency-domain features as an image and performing 2D convolution operations on it.

• Recurrent Neural Network (RNN)

RNNs have demonstrated outstanding performance in many sequence modeling tasks, especially speech recognition, language modeling, and translation. An RNN not only exploits the temporal relationships in the input signal, but also uses a "gating" mechanism to capture long-term dependencies.

• Convolutional Recurrent Neural Network (CRNN)

The convolutional recurrent neural network is a hybrid of CNN and RNN that exploits local temporal/spatial correlations. A CRNN model starts with convolutional layers, followed by an RNN that encodes the signal, followed by a dense fully connected layer.

• Depthwise Separable Convolutional Neural Network (DS-CNN)

Recently, depthwise separable convolutions have been proposed as an efficient alternative to the standard 3D convolution operation and have been used to build compact network architectures for computer vision.

The DS-CNN first convolves each channel of the input feature map with a separate 2D filter, and then uses pointwise (i.e., 1x1) convolutions to combine the outputs in the depth dimension. Decomposing the standard 3D convolution into a 2D convolution followed by a 1D convolution reduces the number of parameters and operations, making deeper and wider architectures possible even on resource-constrained microcontrollers.

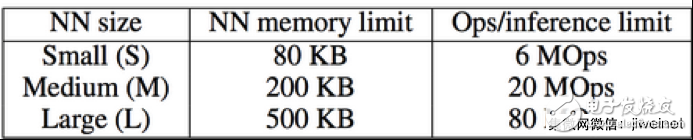

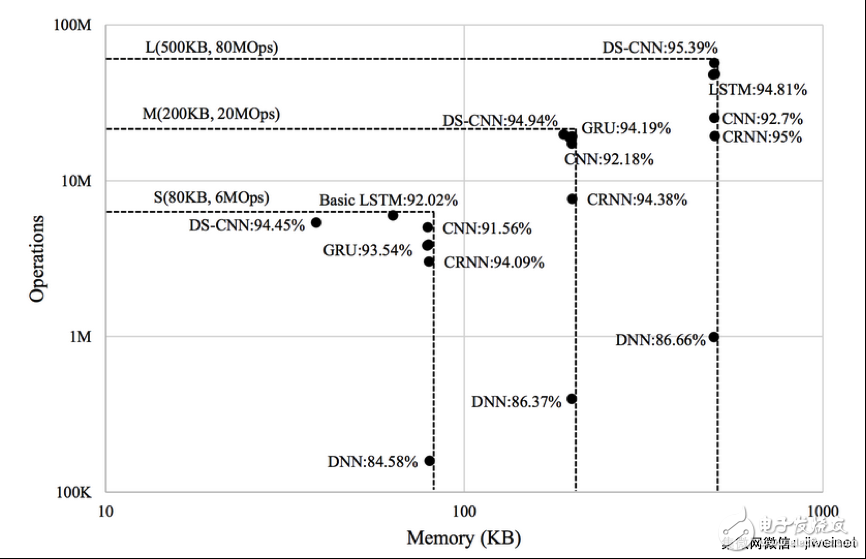
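The savings from the depthwise separable decomposition can be made concrete by counting parameters and multiply-accumulate operations (MACs) for a single layer. The sketch below uses the standard counting formulas and ignores biases. The function names and the layer shape in the example (a 3x3 kernel, 64 input and 64 output channels, a 25x5 output map) are assumptions for illustration, not values from the source.

```python
def standard_conv_cost(k_h, k_w, c_in, c_out, out_h, out_w):
    """Parameters and MACs of a standard convolution layer (biases ignored)."""
    params = k_h * k_w * c_in * c_out
    macs = params * out_h * out_w  # every weight is used once per output position
    return params, macs


def ds_conv_cost(k_h, k_w, c_in, c_out, out_h, out_w):
    """Parameters and MACs of a depthwise separable convolution layer (biases ignored)."""
    depthwise = k_h * k_w * c_in   # one 2D filter per input channel
    pointwise = c_in * c_out       # 1x1 convolution that mixes channels
    params = depthwise + pointwise
    macs = params * out_h * out_w
    return params, macs


# Hypothetical layer shape, for illustration only.
shape = dict(k_h=3, k_w=3, c_in=64, c_out=64, out_h=25, out_w=5)
std_params, std_macs = standard_conv_cost(**shape)
ds_params, ds_macs = ds_conv_cost(**shape)

print(f"standard:  {std_params} params, {std_macs} MACs")
print(f"separable: {ds_params} params, {ds_macs} MACs")
print(f"ratio:     {ds_params / std_params:.3f}")
```

For any layer shape the ratio equals 1/c_out + 1/(k_h * k_w), so with a 3x3 kernel and a reasonably large number of output channels the separable layer needs roughly one eighth to one ninth of the parameters and operations.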

When running keyword spotting on a Cortex-M processor, memory footprint and execution time are the two most important factors to consider when designing and optimizing a neural network. The three sets of neural network constraints shown below target small, medium, and large Cortex-M systems and are based on typical Cortex-M system configurations.

Neural network (NN) classes for KWS models, assuming 10 inferences per second and 8-bit weights/activations

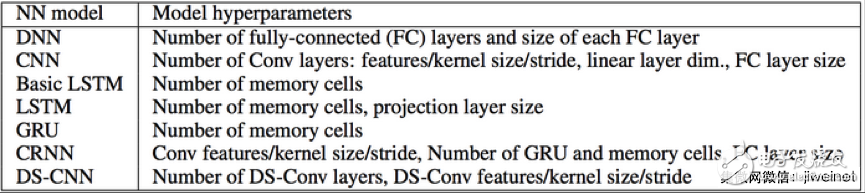

To tune a model so that it does not exceed the microcontroller's memory and compute limits, a hyperparameter search must be performed. The table below shows the neural network architectures and the corresponding hyperparameters that must be optimized.

Neural network hyperparameter search space

An exhaustive search of feature extraction and neural network model hyperparameters is performed first, followed by manual selection to narrow the search space; the two steps are repeated iteratively. The following diagram summarizes the best-performing models for each neural network architecture along with their memory requirements and operation counts. The DS-CNN architecture provides the highest accuracy while requiring much less memory and compute.

Memory versus operations per inference for the best neural network models

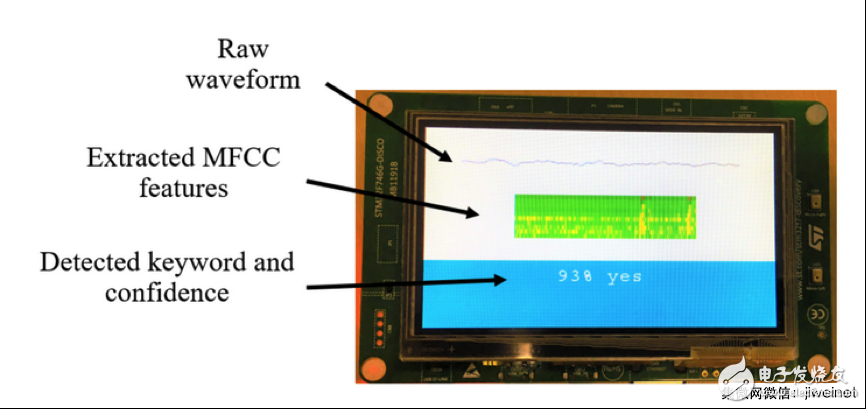
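The first stage of a constrained hyperparameter search, enumerating configurations and discarding those that exceed the memory limit, can be sketched as follows. This is only an illustration under stated assumptions, not the authors' search procedure: it covers a plain DNN, and the input size, class count, memory limit, and hyperparameter grid are all hypothetical. Training and accuracy evaluation of the surviving candidates are not shown.

```python
from itertools import product

INPUT_DIM = 250           # hypothetical, e.g. 25 frames x 10 MFCC coefficients
NUM_CLASSES = 12          # hypothetical number of output labels
MEMORY_LIMIT = 80 * 1024  # hypothetical weight budget in bytes


def dnn_weight_count(input_dim, hidden_units, num_layers, num_classes):
    """Weights plus biases of a stack of fully connected layers."""
    sizes = [input_dim] + [hidden_units] * num_layers + [num_classes]
    return sum(a * b + b for a, b in zip(sizes, sizes[1:]))


def feasible_configs(layer_options, unit_options, limit_bytes):
    """Enumerate every (layers, units) pair and keep those within the limit."""
    kept = []
    for layers, units in product(layer_options, unit_options):
        # With 8-bit weights, one parameter occupies one byte.
        size = dnn_weight_count(INPUT_DIM, units, layers, NUM_CLASSES)
        if size <= limit_bytes:
            kept.append((layers, units, size))
    return kept


candidates = feasible_configs([1, 2, 3], [64, 128, 256], MEMORY_LIMIT)
for layers, units, size in candidates:
    print(f"{layers} hidden layers x {units} units: {size} bytes")
```

Filtering on memory (and, in the same way, on operation count) before training keeps the expensive part of the search limited to models that could actually be deployed.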

The KWS application is deployed on the Cortex-M7-based STM32F746G-DISCO development board (shown below), using a DNN model with 8-bit weights and 8-bit activations, and performs 10 inferences per second at runtime. Each inference (including memory copying, MFCC feature extraction, and DNN execution) takes approximately 12 milliseconds. To save power, the microcontroller can stay in Wait for Interrupt (WFI) mode for the rest of the time. The entire KWS application occupies approximately 70 KB of memory, including approximately 66 KB for weights, approximately 1 KB for activations, and approximately 2 KB for audio I/O and MFCC features.

KWS deployment on the Cortex-M7 development board
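The deployment figures above imply a low duty cycle, which a short calculation makes explicit. The sketch below uses only the approximate numbers quoted in the text; the variable names are illustrative.

```python
INFERENCES_PER_SECOND = 10
INFERENCE_TIME_MS = 12  # approximate, from the text

# Time the core is busy in each second, and the fraction left for WFI.
active_ms = INFERENCES_PER_SECOND * INFERENCE_TIME_MS
duty_cycle = active_ms / 1000
idle_fraction = 1 - duty_cycle

# Approximate memory breakdown quoted in the text, in KB.
memory_kb = {"weights": 66, "activations": 1, "audio I/O and MFCC features": 2}
total_kb = sum(memory_kb.values())

print(f"active {active_ms} ms per second ({duty_cycle:.0%}), idle {idle_fraction:.0%}")
print(f"itemized memory: {total_kb} KB")
```

The core is busy for about 120 ms of every second, so it can spend roughly 88% of the time in WFI. The itemized memory figures sum to 69 KB, consistent with the approximate 70 KB total.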

In summary, by adjusting the network architecture, keyword spotting applications on Arm Cortex-M processors can achieve high accuracy while keeping memory and compute requirements within limits. The DS-CNN architecture provides the highest accuracy while requiring much less memory and compute.

Code, model definitions, and pretrained models are available at github.com/ARM-software.

Our new machine learning developer site provides a one-stop resource library, detailed product information and tutorials to help address the challenges of machine learning at the edge of the network.
