MapReduce is a computing framework. Like any computing framework, it takes an input, processes that input according to its own computation model, and produces an output, which is the result we need.

What we have to learn is the operating rules of this computation model. A MapReduce job runs in two phases, the map phase and the reduce phase, and each phase takes key-value pairs as input and produces key-value pairs as output. What the programmer has to do is define the functions of these two phases: the map function and the reduce function.
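The two-phase model can be illustrated with a small in-memory sketch. This is plain Java, not Hadoop code: the names `MiniMapReduce`, `Emitter`, `MapFn`, `ReduceFn`, and `countVisits` are hypothetical and exist only for this illustration, and the grouping step stands in for the shuffle that the framework normally performs between the two phases.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// In-memory illustration of the map -> shuffle -> reduce model (not Hadoop code).
class MiniMapReduce {

    // Receives the key-value pairs emitted by a map function.
    interface Emitter<K, V> { void emit(K key, V value); }

    // The map function: one input record in, zero or more key-value pairs out.
    interface MapFn<I, K, V> { void map(I record, Emitter<K, V> out); }

    // The reduce function: one key plus all of its values in, one result out.
    interface ReduceFn<K, V, R> { R reduce(K key, List<V> values); }

    static <I, K extends Comparable<K>, V, R> Map<K, R> run(
            List<I> input, MapFn<I, K, V> mapFn, ReduceFn<K, V, R> reduceFn) {
        // Map phase plus shuffle: group every emitted value under its key, sorted by key.
        Map<K, List<V>> grouped = new TreeMap<>();
        for (I record : input) {
            mapFn.map(record, (k, v) -> grouped.computeIfAbsent(k, x -> new ArrayList<>()).add(v));
        }
        // Reduce phase: call the reduce function once per key.
        Map<K, R> result = new TreeMap<>();
        for (Map.Entry<K, List<V>> group : grouped.entrySet()) {
            result.put(group.getKey(), reduceFn.reduce(group.getKey(), group.getValue()));
        }
        return result;
    }

    // Hypothetical example job: count how often each page appears in a visit log.
    static Map<String, Integer> countVisits(List<String> log) {
        return MiniMapReduce.<String, String, Integer, Integer>run(
                log,
                (page, out) -> out.emit(page, 1),   // map: (page, 1)
                (page, ones) -> ones.size());       // reduce: number of values for the key
    }

    public static void main(String[] args) {
        // prints {/about=1, /home=2}
        System.out.println(countVisits(Arrays.asList("/home", "/about", "/home")));
    }
}
```

The programmer only supplies the two lambdas; everything inside `run` corresponds to work that the framework does.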

MapReduce programming example 1: data deduplication

"Data deduplication" is mainly about grasping and applying the idea of parallelization to filter data meaningfully. Seemingly complex tasks, such as counting the number of distinct kinds of data in a large data set or counting visits from a website log, all involve data deduplication. Let's go into the MapReduce programming of this example.

1.1 Example Description

Deduplicate the data in the data file. Each line in the data file is a single piece of data.

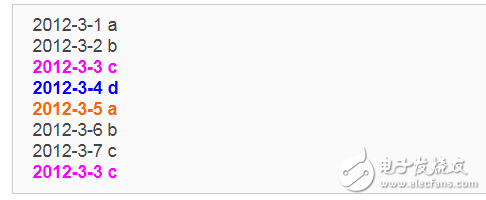

The sample input is as follows:

1) file1:

2) file2:

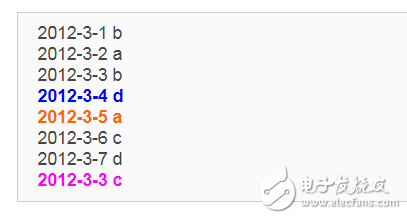

The sample output looks like this:

1.2 Design Ideas

The ultimate goal of data deduplication is that data appearing more than once in the original input appears only once in the output file. The natural approach is to send all records of the same data to the same reduce task: no matter how many times the data appears, it only needs to be output once in the final result. Specifically, the reduce input should use the data itself as the key, with no requirement on the value-list. When reduce receives a "key, value-list", it copies the key directly to the output key and sets the output value to an empty value.

In the MapReduce process, the "key, value" pairs output by map are aggregated into "key, value-list" by the shuffle process and then passed to reduce. Working backward from the reduce input designed above, the output key of map should be the data itself, and the value can be arbitrary. Working back one more step: in this example each piece of data is one line of the input file, so after the input is read with Hadoop's default job input format, the map phase only needs to set the input value as the output key and emit it directly (the output value is arbitrary). The map results are passed to reduce after the shuffle process. The reduce phase does not care how many values each key has; it simply copies the input key to the output key and emits it (the output value is set to empty).
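The data flow described above can be traced step by step with a plain-Java sketch. It does not use Hadoop: `DedupSketch` and its methods are hypothetical names, the sample lines are invented for illustration (they are not the contents of file1 and file2), and the `shuffle` method only imitates the grouping and sorting that the framework performs.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.SortedMap;
import java.util.TreeMap;

// Plain-Java trace of the deduplication design (not Hadoop code).
class DedupSketch {

    // Map phase: each input line becomes the output key; the value is an empty placeholder.
    static List<Map.Entry<String, String>> map(List<String> lines) {
        List<Map.Entry<String, String>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.add(new AbstractMap.SimpleEntry<>(line, ""));
        }
        return pairs;
    }

    // Shuffle: turn "key, value" pairs into "key, value-list", sorted by key.
    static SortedMap<String, List<String>> shuffle(List<Map.Entry<String, String>> pairs) {
        SortedMap<String, List<String>> grouped = new TreeMap<>();
        for (Map.Entry<String, String> pair : pairs) {
            grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>()).add(pair.getValue());
        }
        return grouped;
    }

    // Reduce phase: ignore the value-list and emit each key exactly once.
    static List<String> reduce(SortedMap<String, List<String>> grouped) {
        List<String> output = new ArrayList<>();
        for (String key : grouped.keySet()) {
            output.add(key);
        }
        return output;
    }

    static List<String> dedup(List<String> lines) {
        return reduce(shuffle(map(lines)));
    }

    public static void main(String[] args) {
        // Invented sample data standing in for the lines of two input files.
        List<String> lines = Arrays.asList("apple", "banana", "apple", "banana", "cherry");
        // prints [apple, banana, cherry]
        System.out.println(dedup(lines));
    }
}
```

After the shuffle step, "apple" maps to a value-list of two empty strings, which is why reduce never needs to look at the values.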

1.3 Program Code

The program code is as follows:

    package com.hebut.mr;

    import java.io.IOException;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    public class Dedup {

        // map copies the input value (one line) to the output key and emits it directly
        public static class Map extends Mapper<Object, Text, Text, Text> {

            private static Text line = new Text(); // each line of data

            // Implement the map function
            @Override
            public void map(Object key, Text value, Context context)
                    throws IOException, InterruptedException {
                line = value;
                context.write(line, new Text(""));
            }
        }

        // reduce copies the input key to the output key and emits it directly
        public static class Reduce extends Reducer<Text, Text, Text, Text> {

            // Implement the reduce function; the values are ignored
            @Override
            public void reduce(Text key, Iterable<Text> values, Context context)
                    throws IOException, InterruptedException {
                context.write(key, new Text(""));
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();

            // This setting is very important: it points the job at the cluster's JobTracker
            conf.set("mapred.job.tracker", "192.168.1.2:9001");

            // Input and output directories are hard-coded here instead of read from args
            String[] ioArgs = new String[] {"dedup_in", "dedup_out"};
            String[] otherArgs = new GenericOptionsParser(conf, ioArgs).getRemainingArgs();
            if (otherArgs.length != 2) {
                System.err.println("Usage: Data Deduplication <in> <out>");
                System.exit(2);
            }

            Job job = new Job(conf, "Data Deduplication");
            job.setJarByClass(Dedup.class);

            // Set the Map, Combine and Reduce processing classes
            job.setMapperClass(Map.class);
            job.setCombinerClass(Reduce.class);
            job.setReducerClass(Reduce.class);

            // Set the output types
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(Text.class);

            // Set the input and output directories
            FileInputFormat.addInputPath(job, new Path(otherArgs[0]));
            FileOutputFormat.setOutputPath(job, new Path(otherArgs[1]));

            System.exit(job.waitForCompletion(true) ? 0 : 1);
        }
    }
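The driver registers the same Reduce class as the combiner. This is safe here because Reduce only emits each key once with an empty value, so applying it to partial groups on the map side (Hadoop may run a combiner zero, one, or several times) does not change the final result. The following plain-Java sketch illustrates that property; `CombinerSketch`, `reduceKeys`, and the sample splits are hypothetical and only model the behavior, they are not Hadoop code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.TreeSet;

// Models why the dedup reducer can double as a combiner (not Hadoop code).
class CombinerSketch {

    // Stand-in for Reduce: emits every distinct key once, in sorted order.
    static List<String> reduceKeys(List<String> keys) {
        return new ArrayList<>(new TreeSet<>(keys));
    }

    // All map output goes straight to the reducer.
    static List<String> withoutCombiner(List<List<String>> splits) {
        List<String> all = new ArrayList<>();
        for (List<String> split : splits) {
            all.addAll(split);
        }
        return reduceKeys(all);
    }

    // Each split is reduced locally first; the reducer then sees fewer records.
    static List<String> withCombiner(List<List<String>> splits) {
        List<String> combined = new ArrayList<>();
        for (List<String> split : splits) {
            combined.addAll(reduceKeys(split));
        }
        return reduceKeys(combined);
    }

    public static void main(String[] args) {
        // Invented map outputs for two input splits.
        List<List<String>> splits = Arrays.asList(
                Arrays.asList("a", "b", "a"),
                Arrays.asList("b", "c", "c"));
        System.out.println(withoutCombiner(splits)); // prints [a, b, c]
        System.out.println(withCombiner(splits));    // prints [a, b, c]
    }
}
```

The benefit of the combiner is less data moved during the shuffle: in the sample, six records shrink to four before they reach the reducer.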

1.4 Code Results

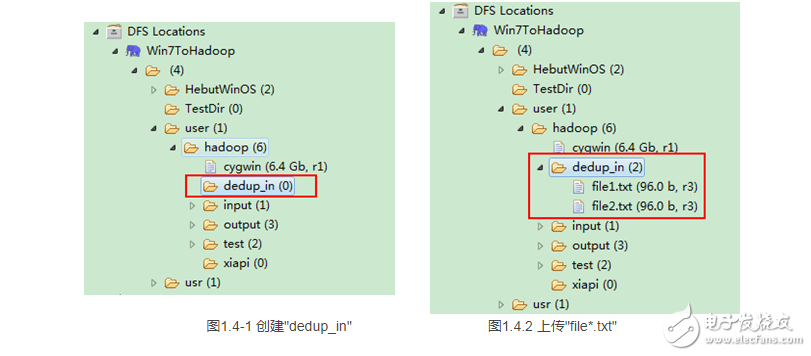

1) Prepare test data

In Eclipse, use "DFS Locations" to create the input folder "dedup_in" under the "/user/hadoop" directory (note: "dedup_out" does not need to be created). Figure 1.4-1 shows the folder after it has been created.

Then create two txt files locally and upload them to the "/user/hadoop/dedup_in" folder via Eclipse. The contents of the two txt files are the same as the files in the example description. Figure 1.4-2 shows the result after a successful upload.

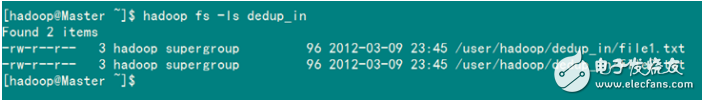

Logging in to "Master.Hadoop" with SecureCRT also confirms that the two files were uploaded.

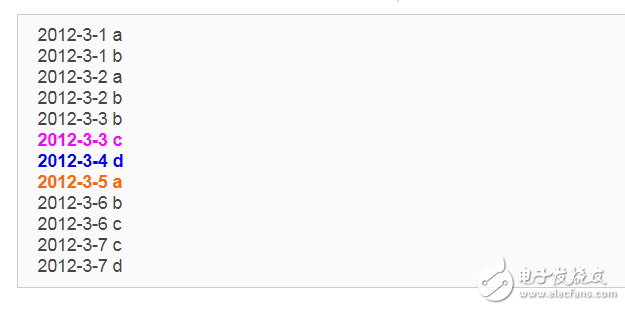

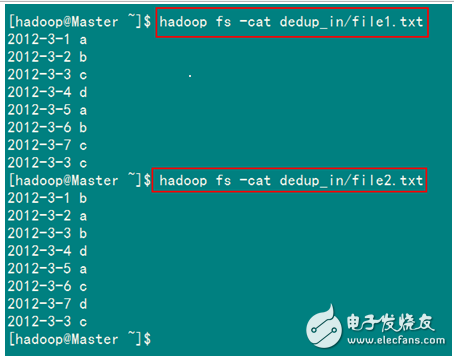

View the contents of the two files as shown in Figure 1.4-3:

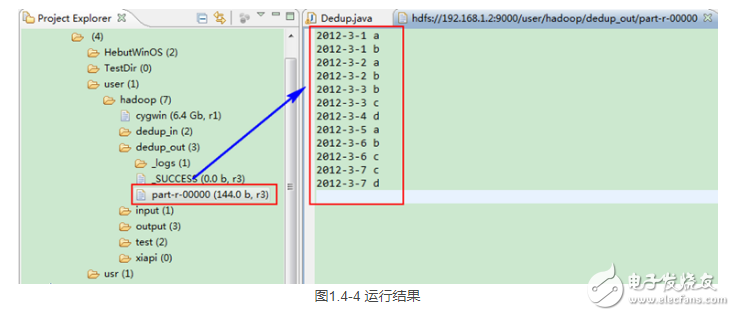

2) View the running results

At this point, right-click the "/user/hadoop" folder in Eclipse's "DFS Locations" and refresh it. A "dedup_out" folder appears, containing 3 files. Double-click the "part-r-00000" file to display its contents in the Eclipse editor area, as shown in Figure 1.4-4.

At this point, you can compare the results with what we expected before.
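The comparison can also be done programmatically. The sketch below is a hypothetical helper (`DedupCheck` is not part of the job) and assumes the input lines and the lines of "part-r-00000" have already been read into lists, for example after copying the files to the local machine. Because the job writes an empty Text value, each output line may end with a tab separator, so the helper strips one trailing tab before comparing.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Hypothetical helper for checking a deduplication result (not part of the Hadoop job).
class DedupCheck {

    // Removes the single trailing tab left by an empty output value, if present.
    static String stripTrailingTab(String line) {
        return line.endsWith("\t") ? line.substring(0, line.length() - 1) : line;
    }

    // True if the output holds every distinct input line exactly once and nothing else.
    static boolean isValidDedup(List<String> inputLines, List<String> outputLines) {
        Set<String> expected = new HashSet<>(inputLines);
        Set<String> actual = new HashSet<>();
        for (String line : outputLines) {
            if (!actual.add(stripTrailingTab(line))) {
                return false; // the same line appears twice in the output
            }
        }
        return actual.equals(expected);
    }

    public static void main(String[] args) {
        // Invented sample data, not the contents of the files in the figures.
        List<String> input = Arrays.asList("a", "b", "a", "c");
        List<String> output = Arrays.asList("a\t", "b\t", "c\t");
        System.out.println(isValidDedup(input, output)); // prints true
    }
}
```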
